Code Snippets

This section collects small snippets of code from various projects I have completed over the years. Most are just one function from a file.

std::map<std::string, std::string> BFS(std::string startNode, Graph g){

std::queue<std::string> visitOrder;

std::map<std::string, bool> explored;

std::map<std::string, std::string> Pi;

visitOrder.push(startNode);

explored[startNode] = true ;

while (!visitOrder.empty()){

std::string v= visitOrder.front();

visitOrder.pop();

std::vector<std::pair<std::string, int>> adjNodes = g.neighbors(v);

for (int i = 0; i < adjNodes.size(); ++i){

std::string n = adjNodes[i].first;

if (explored[n]!= true){

visitOrder.push(n);

explored[n] = {true};

if (Pi[n].empty()){

Pi[n]=v;

}

}

}

}

return Pi;

}

struct comparator{

bool operator()(std::pair<std::string, int> x, std::pair<std::string, int> y){

return x.second> y.second;

}

};

std::map<std::string, std::string> DSP(std::string startNode, Graph g){

//holds the order of who to visit next

std::priority_queue<std::pair<std::string, int>, std::vector<std::pair<std::string, int>>, comparator> visitOrder;

std::map<std::string, int> distance; //holds node and its distance from start to current node

std::map<std::string, std::string> cloud; //holds just like pi (just the nodes)

//set distance of startNode to 0 and all others to infinity (INT_MAX, from <climits>)

distance[startNode]= 0;

std::vector<std::string> nodes = g.nodes();

for(int i=0; i < nodes.size(); ++i){

std::string node = nodes[i];

if(node!= startNode)

distance[node]= INT_MAX;

}

//put startNode in queue and cloud

visitOrder.push(std::make_pair(startNode, 0));

cloud[startNode]= "nullParent";

//until queue is empty iterate

while(!visitOrder.empty()){

//set v to be the current node by popping it out of the queue

int z = visitOrder.top().second;

std::string v = visitOrder.top().first;

visitOrder.pop();

if (z <= distance[v] ){

//get the neighbors and iterate through them

std::vector<std::pair<std::string, int>> adjNodes = g.neighbors(v);

for (int i = 0; i < adjNodes.size(); ++i){

//make string from the first element in pair (name of node)

std::string n = adjNodes[i].first;

//distance from startNode to n when going through v (the edge's weight + z)

int pathDistance = adjNodes[i].second + z;

//if this path is shorter than the best distance recorded for n so far,

//queue n again, record the new distance, and store v as n's parent in the cloud

if (pathDistance < distance[n]){

//put in queue

visitOrder.push(std::make_pair(n, pathDistance));

distance[n] = pathDistance;

cloud[n] = v;

}

}

}

}

return cloud;

}

Dijkstra's Algorithm vs BFS Functions

This project was created for Honors credit in my Algorithms class.

It takes randomly generated graphs of increasing size, runs each one through Dijkstra's algorithm and Breadth-First Search, records the time for each, and prints the results to the console for comparison. Written in Visual Studio. Language: C++
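The comparison described above can be sketched in a few lines of Python. This is only an illustration under assumptions, not the original C++ harness: the graph generator, the helper names (`random_graph`, `bfs`, `dijkstra`, `time_call`), and the graph sizes are all hypothetical.

```python
import heapq
import random
import time
from collections import deque


def random_graph(n, extra_edges, seed=0):
    """Build a connected, undirected weighted graph: {node: [(neighbor, weight), ...]}."""
    rng = random.Random(seed)
    graph = {i: [] for i in range(n)}

    def add_edge(a, b):
        w = rng.randint(1, 10)
        graph[a].append((b, w))
        graph[b].append((a, w))

    for i in range(1, n):  # a simple chain keeps the graph connected
        add_edge(i - 1, i)
    for _ in range(extra_edges):
        a, b = rng.sample(range(n), 2)
        add_edge(a, b)
    return graph


def bfs(graph, start):
    """Return a parent map; edge weights are ignored."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        v = queue.popleft()
        for n, _ in graph[v]:
            if n not in parent:
                parent[n] = v
                queue.append(n)
    return parent


def dijkstra(graph, start):
    """Return (distance map, parent map) using a binary heap."""
    dist = {start: 0}
    parent = {start: None}
    heap = [(0, start)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist[v]:
            continue  # stale queue entry, a shorter path was already found
        for n, w in graph[v]:
            nd = d + w
            if nd < dist.get(n, float("inf")):
                dist[n] = nd
                parent[n] = v
                heapq.heappush(heap, (nd, n))
    return dist, parent


def time_call(fn, *args):
    """Return the wall-clock seconds taken by one call."""
    t0 = time.perf_counter()
    fn(*args)
    return time.perf_counter() - t0


if __name__ == "__main__":
    for size in (100, 1000, 5000):
        g = random_graph(size, extra_edges=2 * size)
        print(size, time_call(bfs, g, 0), time_call(dijkstra, g, 0))
```

BFS ignores edge weights, so the two searches only agree on shortest paths when every edge costs the same; the timing comparison is about the cost of the priority queue, not about equivalent results.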

Feed-Forward Neural Network: Back Propagation Function

From a project in which I built a Feed-Forward Neural Network that sorts information. This function is where learning via back-propagation takes place. It was one of the most challenging functions in the project to get working, and also one of the most essential: this is where the network learns from the previous pass and adjusts the edge weights to reflect what it has learned. Written in Visual Studio. Language: C++
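The weight update at the heart of the function can be sketched for a single layer. This is a simplified sketch that follows the rule stated in the function's own comments (new weight = old weight * momentum - learning rate * delta); the function name and the plain-list representation are assumptions made for illustration.

```python
def output_layer_update(weights, hidden_out, derived_out, derived_err,
                        learning_rate, momentum):
    """Return new weights for the edges between the last hidden layer and the output layer.

    weights[r][c] connects hidden node r to output node c.
    hidden_out   : activated outputs of the last hidden layer
    derived_out  : derivative of the activation at each output node
    derived_err  : derivative of the error at each output node
    """
    # gradient per output node: error derivative * activation derivative
    gradients = [e * d for e, d in zip(derived_err, derived_out)]

    new_weights = []
    for r, h in enumerate(hidden_out):
        row = []
        for c, g in enumerate(gradients):
            delta = h * g  # change in weight for edge (r, c)
            row.append(momentum * weights[r][c] - learning_rate * delta)
        new_weights.append(row)
    return new_weights
```

For the hidden layers the same update applies; only the gradients change, since they are first pushed back through the transposed weights of the layer to the right.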

//Back propagation is in charge of recomputing weights and values based on the output (learning from previous values)

void NeuralNetwork::backPropogation(){

//variables and containers for both parts

double oldWeight; //holds value of old weight for a given matrix index at row r and column c

double dVal; //holds value for the change in weight for a given index in matrix

double newVal; //Holds the computed new value for a given index in the newWeights matrix

vector<MatrixForNN *> updatedWeights; //this vector stores the updated weights for the NN that are found via back propogation

MatrixForNN *deltaW; //holds the change in weight

MatrixForNN *gradients; //holds the change in gradients

MatrixForNN *L; //holds activated values of current left layer

MatrixForNN *newWeights; //holds values of the new weights computed using deltaW and other values

//============================================================

//PART 1 of back propagation: output layer -> last hidden layer

//Since it is the output layer involved, it has to be treated slightly different than if it was just hidden layers

//variables and weights for Part 1 only

int outIndex = mapOfNN.size() - 1; //get output layer's index

double error; // Value of how much error was made

double output; //the actual output value

double gradient; //the gradient value (found by: error*output)

MatrixForNN *derivedVals; //value of derived outputs at output layer

MatrixForNN *Gt; //transposed gradients matrix (used for finding delta weights)

// create a matrix for the change in weights, a matrix of gradients, and a matrix of derived values

//create the new matrix for gradients

//gradient is 1 row and columns = the amount of nodes in output layer

gradients = new MatrixForNN(1, mapOfNN[outIndex], false);

//need derived values at the output layer

//create a new matrix for derived values

derivedVals = layersOfNN[outIndex]->calculateMatrixDerived();

//to get the gradients: G=derivedValues*derivedErrors (by column index)

for (int c = 0; c < mapOfNN[outIndex]; ++c) {

error = derivedErrorValues[c];

output = derivedVals->getOneMatrixValue(0, c);

gradient = error * output;

gradients->setMatrixValue(0, c, gradient);

}

//to get the gradient weights, it is a transpose of the actual gradients with the output of the last hidden layer

//delta values =transpose(Gt* L), where Gt is the transpose of gradients and L is the last hidden layer's output

//the transpose of (Gt*L) is done later in the loop

L = layersOfNN[outIndex - 1]->calculateMatrixActivated();

Gt = gradients->transposeMatrix();

//create new matrix for the delta weights

deltaW = new MatrixForNN(Gt->getNumberOfRows(), L->getNumberOfColumns(), false);

MatrixMultiplication(Gt, L, deltaW);

//Now we can start working towards figuring out the new weights that should be applied to the edges between the hidden layer and the output layer

//rows = same as last hidden layer, columns = same as the output layer, values are set by these two values, so we do not want randomizing

newWeights = new MatrixForNN(mapOfNN[outIndex-1], mapOfNN[outIndex],false);

//now set the new weights by looping through rows of hidden layer and columns of output layer

for (int r = 0; r < mapOfNN[outIndex - 1]; ++r) {

for (int c = 0; c < mapOfNN[outIndex]; ++c) {

//set values of the new weights by taking the old weight values, delta values, learning rate, and momentum

//new weight = (oldweight*momentum)-(learning rate* delta value)

oldWeight = allWeightMatrices[outIndex - 1]->getOneMatrixValue(r, c);

dVal = deltaW->getOneMatrixValue(c, r); //acts as the transpose(gt*L) by switching colum and row element by element

oldWeight = momentum * oldWeight;

dVal = rateOfLearning * dVal;

newVal = oldWeight - dVal;

newWeights->setMatrixValue(r, c, newVal);

}

}

updatedWeights.push_back(new MatrixForNN(*newWeights));

//delete values that we need to reinstantiate to (don't want floating pointers)

delete L;

delete deltaW;

delete newWeights;

//===================================================================================

//PART 2 of back propagation: last hidden layer -> input layer

// variables and containers used in part two of back propagation

double grad; //value of gradient at a given index in matrix (value is recomputed)

MatrixForNN *Gp; //holds value of gradients for the previous layer (Gp= gradients previous)

MatrixForNN *PrevWT; //holds the values of the transpose of the previous weights

MatrixForNN *LastHiddenLayer; //holds derived output values of the last hidden layer (used for finding gradient values in this part)

MatrixForNN *TransposedL; //holds the transposed version of L (needed to find deltaW in this part)

//for this half of back propogation, there needs to be a Gp(gradients previous), which is the previous iteration's gradients (gradients to the right)-> aka,

//if looking at hidden layer->input layer, the Gp= the gradients for output -> hidden layer

//these gradients will be used to find the change in weights for this section by : deltaWeights= X_transposed*New_gradients, where X is the layer on the Left (in the case of a single layer, this is the input layer)

//loop through layer positions from last hidden layer to input layer for a single layer network, this is only one loop)

for (int index = outIndex - 1; index > 0; --index) {

Gp= new MatrixForNN(*gradients);

delete gradients; //clear current pointer to gradients, since it is going to point to a new matrix

// to get the new gradients: G= (gp* transpose(previousWeights))X LastHiddenLayer's Output

PrevWT = allWeightMatrices[index]->transposeMatrix();

//create a new matrix for gradients that has previous gradients row size, and the transposed previous weight columns

gradients = new MatrixForNN(Gp->getNumberOfRows(), PrevWT->getNumberOfColumns(), false);

//do the multiplication of gp and transposed weights

MatrixMultiplication(Gp, PrevWT, gradients);

//make a matrix for the last hidden layer's outputs (part of the equation)-> because we want outputs, we are working with derived values

LastHiddenLayer = layersOfNN[index]->calculateMatrixDerived();

//cross the new gradient matrix with the output of the last hidden layer in order to do the last part of the equation

// gradients = (gp* prevWeightsTransposed)*LastHiddenLayerOutput -> right now have the gp*PWTrans, but don't have the Last Hidden layer

for (int c = 0; c < LastHiddenLayer->getNumberOfColumns(); ++c) { //the layer is stored as a single row, so walk its columns

grad = gradients->getOneMatrixValue(0, c)* LastHiddenLayer->getOneMatrixValue(0, c); //calculate (gp* prevWeightsTransposed)*LastHiddenLayerOutput

gradients->setMatrixValue(0, c, grad); //set new gradient value G

}

//to get the new weights for this section of back propogation, need to multiply gradient matrix by transpose of left layer's outputs

//(in this case, the input layer's, since there is only a single hidden layer)-> reusing L for this

//check to make sure that we are not in the input layer (if we are we cannot get values for the left layers (there are no more layers)

//-> basically want to stop at the first hidden layer and make L= input layer. Don't want to look for L in input layer

if (index == 1) {

L = layersOfNN[0]->calculateMatrixRaw(); //back at input layer, so want the raw values that were input

}else {

L = layersOfNN[index - 1]->calculateMatrixActivated(); //all other layers (assuming there was more than 1 hidden layer) use the activated values of the layer to the left, not raw, because it is a hidden layer.

}

//Now that L has been updated to the proper level, to get the new weights, the transposed version of this value is needed (dW= gradient * LayerOutputsTransposed)

TransposedL = L->transposeMatrix();

//make new deltaW matrix-> reuse deltaW matrix for the weights from hiddent to input as well

deltaW = new MatrixForNN(TransposedL->getNumberOfRows(), gradients->getNumberOfColumns(), false);

//now can get the new weights via matrix multiplication

MatrixMultiplication(TransposedL, gradients, deltaW);

//now update the weights the same way that was done for the output->last hidden layer section

newWeights = new MatrixForNN(allWeightMatrices[index-1]->getNumberOfRows(),allWeightMatrices[index-1]->getNumberOfColumns(), false);

//set the new weights

for (int r = 0; r <newWeights->getNumberOfRows(); ++r) {

for (int c = 0; c < newWeights->getNumberOfColumns(); ++c) {

//set values of the new weights by taking the old weight values, delta values, learning rate, and momentum

//Note: new weight = (oldweight*momentum)-(learning rate* delta value)

oldWeight = allWeightMatrices[index-1]->getOneMatrixValue(r, c);

dVal = deltaW->getOneMatrixValue(r, c);

oldWeight = momentum * oldWeight;

dVal = rateOfLearning * dVal;

newVal = oldWeight - dVal; //reuse the newVal declared at the top instead of shadowing it

newWeights->setMatrixValue(r, c, newVal);

}

}

updatedWeights.push_back(new MatrixForNN(*newWeights));

}

//cleanup Stage

//the matrices that have been pushed into updatedWeights backwards, so this needs to be fixed

//the updatedWeights vector will be applied to the AllWeightMatrices vector in the class, so the current pointers in that vector need to be deleted before being over written

//also need to take care of deleting all Matrix pointers that were used.

//clear current weight matrices that are saved in the vector that is a member variable of NN class

for (int i = 0; i < allWeightMatrices.size(); ++i) {

delete allWeightMatrices[i];

}

//clear/ empty the vector now that all pointers were dealt with

allWeightMatrices.clear();

//reverse the order of the weight matrices in the vector so that they are going from beginning of NN to end (instead of vice versa)

std::reverse(updatedWeights.begin(), updatedWeights.end());

//Now these values can be pushed into allWeightMatrices and the pointer in UpdatedWeights can be deleted

for (int i = 0; i < updatedWeights.size(); ++i) {

allWeightMatrices.push_back(new MatrixForNN(*updatedWeights[i])); //push new matrix to AllWeightMatrices based on matrix in updatedWeights

delete updatedWeights[i];

}

delete deltaW;

delete gradients;

delete L;

delete newWeights;

delete derivedVals;

delete Gt;

}

void Ray_Tracer::ray_tracing(M3DVector3f start, M3DVector3f direct, float weight, M3DVector3f color, unsigned int depth, Basic_Primitive* prim_in)

{

m3dNormalizeVector(direct);

Basic_Primitive* prim = NULL;

if (weight < _min_weight) {

color[0] = 0.0;

color[1] = 0.0;

color[2] = 0.0;

}

else {

M3DVector3f intersect_p;

Intersect_Cond type = _scene.intersection_check(start, direct, &prim, intersect_p);

if (type != _k_miss)

{

M3DVector3f am_light;

M3DVector3f reflect_direct, refract_direct;

M3DVector3f local_color, reflect_color, refract_color;

m3dLoadVector3(local_color, 0.5, 0.5, 0.5);

_scene.get_amb_light(am_light);

prim->shade(direct, intersect_p, _scene.get_sp_light(), am_light, local_color, false);

//getting the reflection stuff

prim->get_reflect_direct(direct, intersect_p, reflect_direct);

float ws;

float wt;

float kt;

float ks;

prim->get_properties(ks, kt, ws, wt);

ray_tracing(intersect_p, reflect_direct, weight * ws, reflect_color, depth, prim_in);

if (prim->get_refract_direct(reflect_direct, intersect_p, refract_direct, 1.5, true)) {

ray_tracing(intersect_p, refract_direct, weight * wt, refract_color, depth, prim_in);

for (int k = 0; k < 3; k++)

{

color[k] = local_color[k] + ks * reflect_color[k] + kt * refract_color[k];

if (check_shadow(intersect_p)) {

if (color[k] <= 0.1) {

color[k] = 0.1;

}else{

color[k] = color[k] - 0.1;

}

}

}

}

else {

for (int k = 0; k < 3; k++) {

color[k] = local_color[k] + ks * reflect_color[k];

if (check_shadow(intersect_p)) {

if (color[k] < 0.1) {

color[k] = 0;

}

else {

color[k] = color[k] - 0.1;

}

}

}

}

}

else {

color[0] = 0.0;

color[1] = 0.0;

color[2] = 0.0;

}

}

}

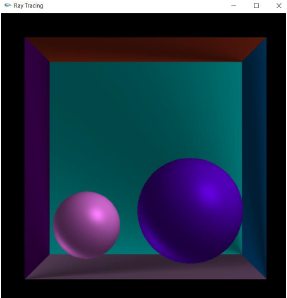

Raytracing Global Shading

Ray tracing function from a graphics project. The calculations for global shading via ray tracing are done in this function. The result of the program with ray tracing enabled is included for reference. Written in Visual Studio. Language: C++, Additional Libraries: OpenGL
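The recursion in the ray tracing function stops once a ray's weight drops below a minimum. A toy sketch of that cutoff follows, assuming a scene where every hit has the same local color and only reflection is followed; the function name `trace` and its parameters are hypothetical and stand in for the real intersection and shading calls.

```python
def trace(weight, local, ks, ws, min_weight):
    """Intensity seen along a ray in a toy scene where every hit looks the same.

    weight     : contribution of this ray to the final pixel
    local      : local shading value at each hit
    ks         : reflection coefficient applied to the reflected color
    ws         : factor by which the weight shrinks on each reflection
    min_weight : rays weaker than this are treated as black
    """
    if weight < min_weight:
        return 0.0  # contribution too small to matter: stop recursing
    reflected = trace(weight * ws, local, ks, ws, min_weight)
    return local + ks * reflected


print(trace(1.0, 0.5, 0.5, 0.5, 0.2))
```

As long as `ws` is below 1, the weight shrinks on every bounce, so the recursion always terminates without needing a separate depth counter.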

WebGL Raindrop project: Render Function

This is the rendering function for a project designed as an Honors supplement to undergraduate Computer Graphics. The project overlaid a landscape with raindrops that scrolled down the screen, giving a somewhat realistic effect without having to delve deeply into WebGL water mechanics. A screenshot of roughly what the scene looked like is included below for reference. The render function is where the scrolling mechanics and the rendering of the pixels are done. Written in Notepad++. Language: JavaScript, Additional Libraries: WebGL
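The scrolling logic can be sketched independently of WebGL. The sketch below mirrors the per-frame offset update in the render function, using the same step sizes; the function name `step_offset` is an assumption made for illustration.

```python
def step_offset(x, y, dy=0.001, dx=0.0001):
    """Advance the rain texture offset by one frame and return the new (x, y)."""
    y_wrap = y >= 1.0  # rain texture has scrolled a full screen
    x_wrap = x >= 1.0  # sideways drift has gone a full screen

    if y_wrap:
        y = 0.0   # restart the scroll at the top
        x += dx   # and nudge the texture sideways so the pattern does not repeat exactly
    else:
        y += dy   # keep scrolling down

    if x_wrap:
        x = 0.0
    return x, y


# simulate a few frames
x, y = 0.0, 0.0
for _ in range(3):
    x, y = step_offset(x, y)
```

In the real render loop the resulting pair is uploaded as a `vec2` uniform each frame before the rain quad is drawn.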


function render() {

// render to texture

//------------------------------------------------------------------------------------------------------------------------------------------

//SECTION FOR BACKGROUND

gl.useProgram(program1);

gl.clear( gl.COLOR_BUFFER_BIT );

gl.drawArrays( gl.TRIANGLES, 0, 6 );

gl.bindFramebuffer( gl.FRAMEBUFFER, null);

gl.bindTexture(gl.TEXTURE_2D, texture1);

gl.clear( gl.COLOR_BUFFER_BIT );

gl.drawArrays(gl.TRIANGLES, 0, 6);

//------------------------------------------------------------------------------------------------------------------------------------------

// SECTION FOR RAIN

//gl.useProgram(program1);

//gl.clear( gl.COLOR_BUFFER_BIT );

//gl.drawArrays( gl.TRIANGLES, 0, 6 );

gl.bindFramebuffer( gl.FRAMEBUFFER, null);

gl.bindTexture(gl.TEXTURE_2D, texture2);

gl.clear( gl.COLOR_BUFFER_BIT );

//check y

if(y >= 1.0){

y_turnback= true;

}else{

y_turnback = false;

}

//check x

if(x >= 1.0){

x_turnback= true;

}else{

x_turnback = false;

}

//manipulate y

if (!y_turnback){

y+= 0.001;

}else{

y = 0.0;

x+=0.0001;

}

//manipulate x

if(x_turnback){

x = 0.0;

}

//set texOffset

texOffset = vec2(x,y);

gl.uniform2fv( offsetLoc, texOffset );

gl.drawArrays(gl.TRIANGLES, 0, 6);

requestAnimationFrame(render);

}

using UnityEngine;

public class CharacterController : MonoBehaviour {

public GameObject bulletToRight, bulletToLeft;

Vector2 bulletPos;

public float fireRate = 0.5f;

float nextFire = 0.0f;

bool facingRight = true;

public float maxSpeed = 10f;

public Animator anim;

bool grounded = false;

public Transform groundCheck;

float groundRadius = 0.2f;

public LayerMask whatIsGround;

public float jumpForce = 700f;

bool doubleJump = false;

public AudioClip coinSound;

public AudioClip slash;

bool Death;

//***********************

public GameObject sword;

public GameObject DeathAnim;

//*************************

void Start ()

{

anim = GetComponent<Animator>();

}

void FixedUpdate ()

{

grounded = Physics2D.OverlapCircle(groundCheck.position, groundRadius, whatIsGround);

anim.SetBool("Ground", grounded);

if(grounded)

doubleJump = false;

anim.SetFloat ("vSpeed", GetComponent<Rigidbody2D>().velocity.y);

float move = Input.GetAxis ("Horizontal");

anim.SetFloat("Speed", Mathf.Abs(move));

GetComponent<Rigidbody2D>().velocity = new Vector2(move * maxSpeed, GetComponent<Rigidbody2D>().velocity.y);

if(move > 0 &&!facingRight)

Flip ();

else if(move < 0 && facingRight)

Flip ();

if (Input.GetButton("Run"))

{

maxSpeed = 3.5f;

anim.SetFloat("Running",Mathf.Abs(move));

}

else if(!Input.GetButton("Run")){

maxSpeed = 3f;

}

}

void Update()

{

if ((grounded || !doubleJump) && Input.GetKeyDown(KeyCode.Space))

{

anim.SetBool("Ground", false);

GetComponent<Rigidbody2D>().AddForce(new Vector2(0, jumpForce));

if (!doubleJump && !grounded)

doubleJump = true;

}

bool slash = Input.GetKeyDown(KeyCode.F);

if (Input.GetKeyUp(KeyCode.F) || !Input.GetKey(KeyCode.F)) {

anim.SetBool("Slashing", false);

//***********************

sword.SetActive(false);

//************************

}

else if (Input.GetKey(KeyCode.F) || Input.GetKeyDown(KeyCode.F))

{

//**********************

sword.SetActive(true);

//**********************

anim.SetBool("Slashing", true);

}

}

public void OnCollisionEnter2D(Collision2D other)

{

if (other.gameObject.tag == "Coin")

{

GameManager.Instance.CollectedCoins++;

GetComponent<AudioSource>().PlayOneShot(coinSound);

Destroy(other.gameObject);

}

if (other.gameObject.tag == "MovingObject")

{

this.transform.parent = other.transform;

}

}

public void OnCollisionExit2D(Collision2D other)

{

if (other.gameObject.tag == "MovingObject")

{

this.transform.parent = null;

}

}

private void OnTriggerEnter2D(Collider2D col)

{

if (col.gameObject.tag == "Enemy")

{

anim.SetBool("Death", true);

}

}

void Flip()

{

facingRight = !facingRight;

Vector3 theScale = transform.localScale;

theScale.x *= -1;

transform.localScale = theScale;

}

}

Kingdom Quest Player Movement and Animation

Script for the main character's movement in Kingdom Quest, one of the notable projects featured in the game design gallery. Here the jumping, slashing, and movement animations are triggered and the corresponding changes are made to the player's position. The bulk of the player's actions are determined in this script. Designed in Unity; script written in Visual Studio. Language: C#
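The double-jump rule in the script (jump if grounded, or if the second jump has not been used yet) can be sketched as a small state machine. This is a simplified sketch: the class and method names are hypothetical, and the ground check that Unity's physics performs is replaced by an explicit `land()` call.

```python
class JumpState:
    """Tracks whether the player may jump, allowing one extra jump in mid-air."""

    def __init__(self):
        self.grounded = True
        self.double_jump_used = False

    def land(self):
        """Called when the ground check succeeds; restores the double jump."""
        self.grounded = True
        self.double_jump_used = False

    def try_jump(self):
        """Return True if a jump is performed, False if it is refused."""
        if not (self.grounded or not self.double_jump_used):
            return False
        if not self.grounded:
            self.double_jump_used = True  # this jump was the mid-air one
        self.grounded = False
        return True


state = JumpState()
jumps = [state.try_jump() for _ in range(3)]  # ground jump, air jump, refused
```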

Local Shading with Phong Model

Local shading function for the same project as the ray tracing function above. The function evaluates the Phong shading model's equation and adjusts the color accordingly. A render with local shading only is provided below as a reference. Written in Visual Studio. Language: C++, Additional Libraries: OpenGL
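For reference, the textbook form of the Phong model can be sketched for a single color channel. This is the standard formulation (ambient + diffuse + specular, with negative dot products clamped to zero), not a line-by-line port of the function below; the helper names and default light intensities are assumptions.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def phong_intensity(n, l, v, ka, kd, ks, alpha, ambient=1.0, light=1.0):
    """Phong intensity for one channel.

    n : surface normal
    l : direction from the surface point to the light
    v : direction from the surface point to the viewer
    """
    n, l, v = normalize(n), normalize(l), normalize(v)
    l_dot_n = dot(l, n)
    if l_dot_n <= 0.0:
        return ka * ambient  # light is behind the surface: ambient term only

    # reflection of l about n: r = 2 (l . n) n - l
    r = [2.0 * l_dot_n * nc - lc for nc, lc in zip(n, l)]
    v_dot_r = max(dot(v, r), 0.0)

    return ka * ambient + kd * light * l_dot_n + ks * light * v_dot_r ** alpha
```

Clamping the dot products keeps surfaces that face away from the light from receiving negative (or, after a sign flip, spurious positive) lighting.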

void Sphere::shade(M3DVector3f view,M3DVector3f intersect_p,const Light & sp_light, M3DVector3f am_light, M3DVector3f color, bool shadow)

{

//phong model: phong model equation: I = Kd*Id* l_dot_n + Ks*Is((v_dot_r)^alpha) +Ka*Ia

//vectors used in phong equ

M3DVector3f l, n, r, v;

M3DVector3f Ia, Id, Is;

//M3DVector3f phong;

//other values needed for phong

double alpha = 10;

double l_dot_n, v_dot_r;

double kd = _kd;

double ks = 0.2;

double ka = _ka;

//Temp vectors

M3DVector3f r_eq;

//set v to view, just to make it easier on me-> will probably change for computation reasons later

m3dCopyVector3(v, view);

m3dNormalizeVector(v);

//set Ia to am_light for same reason as view

m3dCopyVector3(Ia, am_light);

m3dNormalizeVector(Ia);

//find n got it from https://www.scratchapixel.com/lessons/3d-basic-rendering/minimal-ray-tracer-rendering-simple-shapes/ray-sphere-intersection

m3dSubtractVectors3(n, intersect_p, _pos);

m3dNormalizeVector(n);

_normal[0] = n[0];

_normal[1] = n[1];

_normal[2] = n[2];

//find l and Is

sp_light.get_light(l, Is);

sp_light.get_light_pos(l);

m3dNormalizeVector(l);

m3dNormalizeVector(Is);

//Find l_dot_n

l_dot_n = m3dDotProduct(l, n);

//Find r : 2 (l_dot_n)n-l

double ln = l_dot_n * 2; // 2 (l_dot_n)

for (int i = 0; i < 3; i++) {

r_eq[i] = n[i] * ln; // [2(l_dot_n)]n

}

m3dSubtractVectors3(r, r_eq, l); //[2 (l_dot_n)n]-l

m3dNormalizeVector(r);

_reflect_dir[0] = r[0];

_reflect_dir[1] = r[1];

_reflect_dir[2] = r[2];

//Find Id : kd*Is*(l_dot_n) -> from https://learnopengl.com/Lighting/Basic-Lighting

double diffuse = l_dot_n;

for (int i = 0; i < 3; i++) {

Id[i] = Is[i] * diffuse * kd; // kd * Is * (l_dot_n)

}

//Find v_dot_r

v_dot_r = m3dDotProduct(v, r);

//put it all together

for (int i = 0; i < 3; i++)

{

//phong model equation: I = Kd*Id* l_dot_n + Ks*Is((v_dot_r)^alpha) +Ka*Ia

double phong = kd * (Id[i]) * l_dot_n;

phong = phong + ks * (Is[i])* pow(v_dot_r, alpha);

phong = phong + ka * (Ia[i]);

if (phong < 0) { phong = phong * -1; }

color[i] = _color[i]*phong;

}

}

bool Sphere::get_refract_direct(const M3DVector3f direct, const M3DVector3f intersect_p, M3DVector3f refract_direct, float delta, bool is_in) {

//T=ηI+(ηc1−c2)N -> from https://www.scratchapixel.com/lessons/3d-basic-rendering/introduction-to-shading/reflection-refraction-fresnel

//η1 = 1, η2 = delta

//c1=cos(theta) =N dot I

//c2 = sqrt(1 - pow(1/delta, 2) * (1 - pow(c1, 2)))

//N= norm

//I = direction of incoming ray

//[ηr(NdotI)-sqrt(1-pow(ηr,2)*(1-pow(NdotI,2)))]-ηrI

double c1 = m3dDotProduct(_normal, direct);

if (c1 < 0) { return false; }

double c2 = sqrt(1 - (pow(1.5/delta, 2)) * (1 - (pow(c1, 2))));

_refract_dir[0] = (1.5 / delta) * (direct[0]) + (c1 * (1.5 / delta) - c2) * _normal[0];

_refract_dir[1] = (1.5 / delta) * (direct[1]) + (c1 * (1.5 / delta) - c2) * _normal[1];

_refract_dir[2] = (1.5 / delta) * (direct[2]) + (c1 * (1.5 / delta) - c2) * _normal[2];

m3dNormalizeVector(_refract_dir);

refract_direct[0] = _refract_dir[0];

refract_direct[1] = _refract_dir[1];

refract_direct[2] = _refract_dir[2];

return true;

}

module final6(A, B, C, D, out_1, out_2, out_3);

input A, B, C, D;

output out_1, out_2, out_3;

wire Anot, Bnot, Cnot, Dnot, w1, w2, w3, w4, w5, w6, w7, w8, w9, w10, w11;

assign Anot = ~A;

assign Bnot = ~B;

assign Cnot = ~C;

assign Dnot = ~D;

assign w1 = A | Bnot;

assign w2 = C | D;

assign out_1 = w1 & Cnot & w2;

assign w3 = Cnot & D;

assign w4 = B & C & D;

assign w5 = C & Dnot;

assign w6 = w3 | w4 | w5;

assign w7 = Anot | B;

assign out_2 = w6 & w7;

assign w8 = A & B;

assign w9 = w8 | C;

assign w10 = w9 & D;

assign w11 = Bnot & C;

assign out_3 = w10 | w11;

endmodule // final6

`timescale 1ns/1ps

module final6_test;

reg [3:0] in;

wire [2:0] out;

final6 uut(in[3], in[2],in[1], in[0], out[2], out[1], out[0]);

initial begin

$dumpfile("final6_test.vcd");

$dumpvars(0, final6_test);

in = 4'b0000;

repeat(15) #10 in = in + 1'b1;

end

initial begin

$monitor("Time: %4d | A: %d B: %d C: %d D: %d | out_1: %d out_2: %d out_3: %d \n", $time, in[3], in[2], in[1], in[0], out[2], out[1], out[0]);

end

endmodule // final6_test

Verilog Project

A Verilog project that uses assign statements to design the logic of a virtual circuit. The second half is the Verilog testbench that runs and tests the module. Written in MobaXterm. Language: Verilog
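The module's logic can be cross-checked outside a simulator. The sketch below re-expresses the three outputs as Python boolean expressions and lists all 16 input combinations in the same order the testbench counts through them; the function names are hypothetical.

```python
from itertools import product


def final6(a, b, c, d):
    """Return (out_1, out_2, out_3) for one input combination, as 0/1 integers."""
    a, b, c, d = bool(a), bool(b), bool(c), bool(d)
    out_1 = (a or not b) and (not c) and (c or d)
    out_2 = ((not c and d) or (b and c and d) or (c and not d)) and (not a or b)
    out_3 = (((a and b) or c) and d) or (not b and c)
    return int(out_1), int(out_2), int(out_3)


def truth_table():
    """All 16 rows as (A, B, C, D, out_1, out_2, out_3), counting up from 0000."""
    return [bits + final6(*bits) for bits in product((0, 1), repeat=4)]


for row in truth_table():
    print(*row)
```

Comparing this table against the `$monitor` output of the testbench is a quick way to confirm the wiring of the intermediate signals.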

Image Processing: Sobel Edge Detection Filter

This function applies a Sobel Edge Detection filter to an image. It was written as a filter option for a graphics image processing study. The original and the resulting images are displayed below for reference. Written in Visual Studio Code. Language: Python, Additional Libraries in the project: Pillow and tkinter
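The core of the filter can be sketched without Pillow, on a grayscale image stored as a list of rows. This is a minimal sketch that leaves border pixels black instead of padding the image; the name `sobel` and the nested-list representation are assumptions made for illustration.

```python
import math

# Sobel kernels, indexed [row][column]
GX = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))    # responds to horizontal intensity changes
GY = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))    # responds to vertical intensity changes


def sobel(gray):
    """gray: list of rows of 0-255 ints. Returns a same-size list of edge magnitudes."""
    height, width = len(gray), len(gray[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    p = gray[y + dy][x + dx]
                    gx += GX[dy + 1][dx + 1] * p
                    gy += GY[dy + 1][dx + 1] * p
            # gradient magnitude, clamped to the 8-bit range
            out[y][x] = min(255, round(math.sqrt(gx * gx + gy * gy)))
    return out


edges = sobel([[0, 0, 255], [0, 0, 255], [0, 0, 255]])
```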


def sobelEdgeDetection(self, cb):
    # Assumes `import math` and `from PIL import Image, ImageOps` at module level.
    img_new = Image.new('RGB', (self.width, self.height), "black")  # black image that will hold the edge map
    pixels_new = img_new.load()  # create pixel map
    grey_img = ImageOps.grayscale(self.img)
    grey_pix = grey_img.load()

    # initialize operators (kernel matrices)
    Op1 = (
        [-1, -2, -1],
        [0, 0, 0],
        [1, 2, 1]
    )
    Op2 = (
        [-1, 0, 1],
        [-2, 0, 2],
        [-1, 0, 1]
    )

    # Pillow pixel maps are indexed [x, y], so i walks the width and j walks the height
    for i in range(self.width):
        for j in range(self.height):
            rSum1 = 0
            rSum2 = 0
            for k in range(-1, 2):      # offsets -1, 0, 1 cover the full 3x3 kernel
                for l in range(-1, 2):
                    # skip neighbors that fall outside the image
                    if 0 <= i + k < self.width and 0 <= j + l < self.height:
                        temp_pixel = grey_pix[i + k, j + l]
                        sOp1 = Op2[k + 1][l + 1]
                        sOp2 = Op1[k + 1][l + 1]
                        rSum1 = rSum1 + sOp1 * int(temp_pixel)
                        rSum2 = rSum2 + sOp2 * int(temp_pixel)
            # gradient magnitude, clamped to the 8-bit range
            r_new_pixel = min(round(math.sqrt(rSum1 * rSum1 + rSum2 * rSum2)), 255)
            pixels_new[i, j] = (r_new_pixel, r_new_pixel, r_new_pixel)

    # img_new.show()
    print("DONE sobel edge detection")
    self.img = img_new
    self.pixels = self.img.load()
    cb()
    return ImageOps.grayscale(img_new)

#Database functionality

#######################################################

import datetime

import time

import os

import pymongo

from bson.objectid import ObjectId

client = pymongo.MongoClient("mongodb+srv://alpha-user:alpha-1234@cluster0.ohpqk.mongodb.net/todo?retryWrites=true&w=majority",

connectTimeoutMS=30000,

socketTimeoutMS=None,

# socketKeepAlive=True,

connect=False, maxPoolsize=1)

#client = pymongo.MongoClient("mongodb+srv://<username>:<password>@cluster0.ohpqk.mongodb.net/<dbname>?retryWrites=true&w=majority")

#db = client.test

db = client.todo

from bottle import get, post, request, response, template, redirect, default_app

# APPLICATION PAGES AND ROUTES

# assume collection contains fields "id", "task", "status"

@get('/not_done_items')

def get_not_done_items():

    #given all of the tasks, show a table with only the ones not done

    #stVal = 0

    myquery = {'status': False}

    result = db.task.find(myquery)

    return template("not_done", rows=result, session={})

application = default_app()

if __name__ == "__main__":

    #db.tasks.insert_one({'id':1, 'task':"read a book on Mongo", "status":False})

    #db.tasks.insert_one({'id':2, 'task':"read a another book on PyMongo", "status":False})

    print(get_show_list())

################################################################

//Database Template

//###############################################################

<html>

<head>

<title>Todo List: Unfinished Tasks</title>

<link href="https://fonts.googleapis.com/icon?family=Material+Icons" rel="stylesheet"/>

<link href="https://www.w3schools.com/w3css/4/w3.css" rel="stylesheet" >

</head>

<body>

%include("header.tpl", session=session)

<table class="w3-table w3-bordered w3-border">

%for row in rows:

<tr>

<td>

<a href="/update_task/{{row['_id']}}"><i class="material-icons">edit</i></a>

</td>

<td>

{{row['task']}}

</td>

<td>

<a href="/delete_item/{{row['_id']}}"><i class="material-icons">delete</i></a>

</td>

</tr>

%end

</table>

%include("footer.tpl", session=session)

</body>

</html>

To-Do List Database and Template

My portion of a larger project completed in a database course. The overall project was a to-do list hosted online and backed by a database. My portion displayed a table containing only the tasks not yet marked as completed. The second half is the HTML template for that table. Written on PythonAnywhere using MongoDB for the database. Language: Python (pymongo)
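The effect of the `{'status': False}` query can be sketched on an in-memory list, with no database involved. The sample tasks and the function name `not_done` are made up for illustration; the field names follow the ones the route assumes ("id", "task", "status").

```python
def not_done(tasks):
    """Return only the tasks whose status is False, like find({'status': False})."""
    return [t for t in tasks if t.get("status") is False]


# hypothetical sample data
tasks = [
    {"id": 1, "task": "read a book on Mongo", "status": False},
    {"id": 2, "task": "write the template", "status": True},
    {"id": 3, "task": "deploy the app", "status": False},
]

for row in not_done(tasks):
    print(row["id"], row["task"])
```

Documents that lack a `status` field are skipped here, which matches how an equality filter on `False` behaves in MongoDB.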

ROS Color Detection

Color detection script using Robot Operating System (ROS) libraries. A preliminary step toward having simulated robots detect color. Written in Visual Studio Code on an Ubuntu machine. Language: Python (rospy), Additional Libraries: ROS libraries, OpenCV libraries.
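The color test itself is a range check in HSV space. The sketch below reproduces that check for a single pixel using only the standard library, with the same blue bounds as the script. It assumes OpenCV's 8-bit convention (hue 0-179, saturation and value 0-255) and may differ from OpenCV's own rounding by a unit; the function names are hypothetical.

```python
import colorsys

LOWER = (110, 50, 50)     # lower HSV bound for blue
UPPER = (130, 255, 255)   # upper HSV bound for blue


def bgr_to_opencv_hsv(b, g, r):
    """Convert one 8-bit BGR pixel to approximate OpenCV-style HSV."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return (int(round(h * 180)) % 180, int(round(s * 255)), int(round(v * 255)))


def in_range(b, g, r):
    """True if the pixel falls inside the blue range on every HSV channel."""
    hsv = bgr_to_opencv_hsv(b, g, r)
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, LOWER, UPPER))


print(in_range(255, 0, 0))   # pure blue in BGR order
print(in_range(0, 0, 255))   # pure red in BGR order
```

The check only makes sense on HSV values, which is why the frame has to be converted before thresholding.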

#!/usr/bin/env python
from __future__ import print_function

import sys
import rospy
import rospkg
import cv2
from std_msgs.msg import String
from sensor_msgs.msg import Image
from cv_bridge import CvBridge, CvBridgeError
import numpy as np
#from msvcrt import getch  # Windows-only module; only needed by the commented-out key handling below

#hit_pub= rospy.Publisher("Caitlyn/hit", String, queue_size=10);
color_loc = (0, 0)  # center of the most recently detected color region
#center = (0,0)

class image_converter:

    def __init__(self):
        self.image_pub = rospy.Publisher("image_topic_2", Image, queue_size=10)
        self.bridge = CvBridge()
        self.image_sub = rospy.Subscriber("image_raw", Image, self.callback)

    def callback(self, data):
        global color_loc
        try:
            frame = self.bridge.imgmsg_to_cv2(data, "bgr8")
        except CvBridgeError as e:
            print(e)
            return  # no frame to process

        hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

        # define range of blue color in HSV
        lower = np.array([110, 50, 50])
        upper = np.array([130, 255, 255])

        # find the colors within the specified boundaries and build
        # the mask (threshold the HSV image, not the BGR frame)
        mask = cv2.inRange(hsv, lower, upper)

        # Draw a rectangle around each detected color region.
        # [-2] picks the contour list under both the OpenCV 3 and OpenCV 4 return conventions.
        contours = cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]
        for contour in contours:
            x, y, w, h = cv2.boundingRect(contour)
            cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)
            color_loc = ((x + w / 2), (y + h / 2))

        #------------------------USE Cascades-------------------------------------------------
        output = cv2.bitwise_and(frame, frame, mask=mask)
        cv2.imshow("Image window", frame)
        cv2.waitKey(3)

        try:
            self.image_pub.publish(self.bridge.cv2_to_imgmsg(frame, "bgr8"))
        except CvBridgeError as e:
            print(e)

def main(args):
    ic = image_converter()
    rospy.init_node('image_converter', anonymous=True)
    #if(getch() == 's', && color == center):
    #hit_pub.publish("Target hit!")
    #elif (getch() == 's' && color_loc != frame_center)
    #hit_pub.publish("Sorry. Nothing was hit.")
    try:
        rospy.spin()
    except KeyboardInterrupt:
        print("Shutting down")
    cv2.destroyAllWindows()

if __name__ == '__main__':
    main(sys.argv)

void FinalProject::setRayCast(Ogre::SceneNode* lightNode){

Ogre::RaySceneQuery *mRaySceneQuery;

mRaySceneQuery = mSceneMgr->createRayQuery(Ogre::Ray());

mRaySceneQuery->setSortByDistance(true);

Ogre::Vector3 position = lightNode->_getDerivedPosition();

Ogre::Vector3 norm= spotLight->getDerivedDirection();

// create the ray to test

Ogre::Ray centerRay(position, norm);

mRaySceneQuery->setRay(centerRay);

Ogre::RaySceneQueryResult& result = mRaySceneQuery->execute();

Ogre::RaySceneQueryResult::iterator itr = result.begin();

//iterate through the results

for ( itr = result.begin(); itr != result.end(); itr++ )

{

if ( itr->movable )

{

if (itr->movable->getQueryFlags() == 5) {

OgreHead* target = Ogre::any_cast<OgreHead*>( itr->movable->getUserAny() ); //getParentSceneNode();

//got target, so now assign to key, after checking that it has not been assigned to any other

if(!target->getDeath()){

activeOgres.push_back(target);

lightOn = false;

target->setBool(true);

target->getEntity()->setVisible(true);

goto endloop; //only want the first one it hit, and since I sorted by distance,

//the closest ogre is the first movable object that is an ogre

}

}

}

}

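endloop:

mSceneMgr->destroyQuery(mRaySceneQuery); //release the query so a new one is not leaked on every call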

}

Penguin Run Raycast Query

This is a crucial function from Penguin Run, a game that I developed using the Ogre Game Engine. A screenshot of the game is available in the Game Development Gallery. This function runs the raycast for the game's 'flashlight' feature, which lets hidden monsters be seen and defeated for extra points. The raycasting was one of the most challenging parts of the project, but it definitely added a little more excitement to the game. Written in Visual Studio. Language: C++, Additional Libraries: Ogre Game Engine Libraries.
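The selection rule inside the raycast (take the closest hit that is a living monster and ignore everything else) can be sketched without the engine. The tuple layout and the function name are assumptions made for illustration; in the game the hits come from the engine's ray query, already sorted by distance.

```python
def first_live_target(hits):
    """Return the name of the nearest living target, or None if there is none.

    hits: iterable of (distance, name, is_dead) tuples in any order.
    """
    for distance, name, is_dead in sorted(hits):  # nearest hit first
        if not is_dead:
            return name  # stop at the first living target, like the early exit in the loop
    return None


hits = [(5.0, "ogre_b", False), (2.0, "ogre_a", True), (9.0, "ogre_c", False)]
print(first_live_target(hits))
```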